OpenAI has just rolled out their powerful GPT-4.1 and GPT-4.1 mini models to the ChatGPT interface, bringing enhanced coding capabilities and improved instruction following to millions of users.

In my experience testing these new models, I’ve found significant improvements over GPT-4o in several key areas.

However, there’s a major catch that many users aren’t aware of: while the API version boasts an impressive 1 million token context window, ChatGPT users are getting a significantly restricted experience.

This context window disparity creates a substantial gap between what developers can access via the API and what regular ChatGPT users experience.

I’ve been using the API version for a while now, and the difference is night and day when handling large documents or maintaining conversation history.

Let’s dive into what this means for you, how these models stack up against competitors like Claude 3.7 Sonnet and Gemini 2.5, and who should use which model for the best results.

GPT-4.1 and 4.1 Mini: What’s New in ChatGPT’s Latest Update

On May 14, 2025, OpenAI announced that GPT-4.1 and GPT-4.1 mini would be available directly within the ChatGPT interface.

According to the official release notes, this update represents a significant milestone in bringing their latest advancements to a broader audience.

The rollout has been tiered based on subscription level:

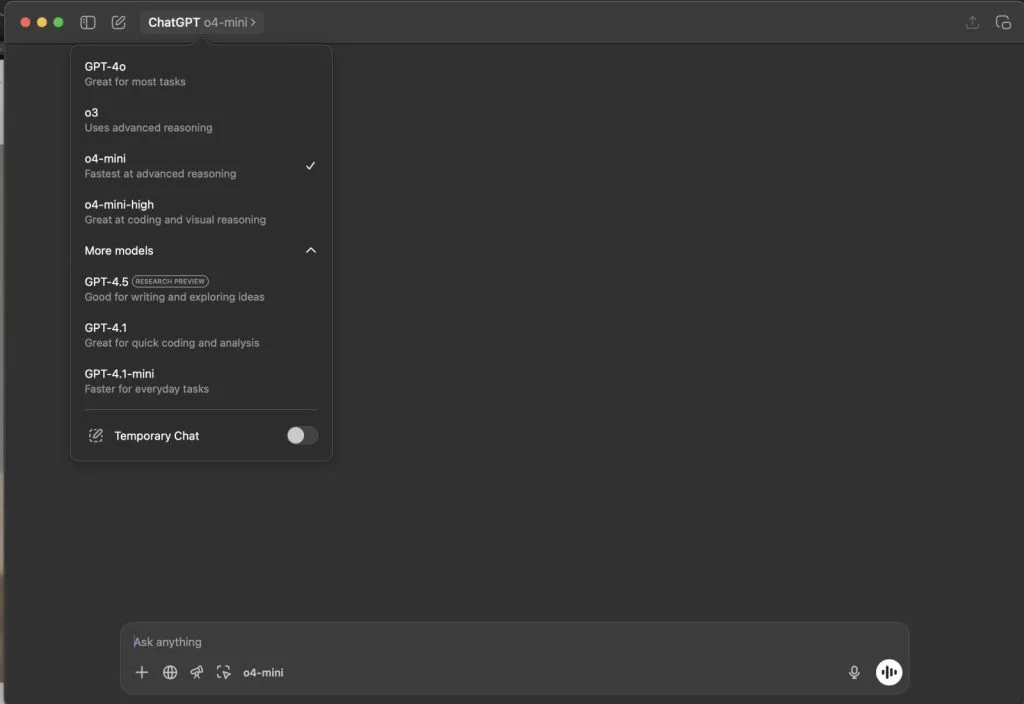

- ChatGPT Plus, Pro, and Team subscribers can now access the full GPT-4.1 model via the “More models” dropdown in the model picker

- Free users get access to GPT-4.1 mini, which has replaced the older GPT-4o mini as the default fallback model

- Enterprise and Edu users will receive access in the coming weeks

I’ve tested both models extensively, and they represent a notable step forward in several areas – particularly coding and instruction following.

What impresses me most is how GPT-4.1 parses complex instructions and maintains context throughout longer conversations, even with its limited context window in the ChatGPT interface.

The Million-Token Gap: ChatGPT vs. API Context Window Limitations

Here’s where things get interesting – and potentially frustrating for many ChatGPT users.

While OpenAI heavily promotes GPT-4.1’s impressive 1 million token context window, this capability is primarily reserved for API users.

Understanding Context Windows and Why They Matter

A model’s context window determines how much information it can consider simultaneously to generate a response.

Larger context windows generally enable:

- Better coherence across long conversations

- Deeper understanding of complex inputs

- The ability to process longer documents

- Maintenance of context over extended interactions

When I first started using the GPT-4.1 API with its full 1 million token context, the difference was immediately noticeable.

I could upload entire codebases, lengthy research papers, or detailed project specifications, and the model could reference any part of that information in its responses with remarkable accuracy.

The Reality for ChatGPT Users: Tiered Context Limitations

According to OpenAI’s official ChatGPT pricing page, ChatGPT users face significantly smaller context windows based on their subscription tier:

This creates a substantial gap between what the model is capable of and what users can access through the ChatGPT interface.

For example, as a Pro user, I’m limited to just 128K tokens – approximately one-eighth of the model’s full capacity.

However, the ChatGPT integration comes with the same limits as GPT-4o, suggesting these restrictions are likely related to computational costs and resource allocation.

GPT-4.1 vs. Competition: How It Stacks Up in the AI Arena

To get a clearer picture of how GPT-4.1 compares to other leading AI models, I’ve analyzed the latest benchmark data from LiveBench and real-world testing results.

GPT-4.1 vs. GPT-4o: Is It Worth Switching?

When comparing GPT-4.1 to its predecessor within the ChatGPT interface, several key differences emerge:

In my testing, I’ve found GPT-4.1 to be noticeably better at following complex instructions and generating precise code.

It also seems to hallucinate less frequently when asked factual questions.

However, GPT-4o still excels in multimodal tasks that involve analyzing images or generating visual content.

The Competitive Landscape: OpenAI vs. Anthropic vs. Google

Looking at the broader AI landscape, here’s how the latest models from major providers compare according to LiveBench data:

This comparison reveals some interesting insights.

While GPT-4.1 excels in coding and instruction following, Gemini 2.5 Pro and Claude 3.7 Sonnet Thinking demonstrate stronger performance in reasoning and mathematics.

Despite what the benchmarks suggest, I personally find Claude 3.7 Sonnet Thinking and Gemini 2.5 Pro to be superior for coding tasks in my day-to-day work.

Their reasoning capabilities often lead to more thoughtful and accurate code solutions, especially for complex problems.

Each model has its unique strengths, making them suitable for different use cases.

Both Gemini 2.5 models offer a full 1M token context to all users, while Claude 3.7 Sonnet provides 200K tokens – still greater than ChatGPT Pro’s 128K limit.

Real-World Performance: User Experiences and Practical Applications

Beyond benchmarks, I’ve analyzed user reports and tested these models in practical scenarios to understand their real-world performance.

Coding and Development Tasks

GPT-4.1 truly shines when it comes to coding tasks.

Based on my tests, it demonstrated remarkable improvements over GPT-4o in several areas:

- More accurate code generation

- Better understanding of complex codebases

- Improved debugging capabilities

- More precise follow-through on specific coding instructions

Testing mentioned in provided materials showed that GPT-4.1 was able to analyze a dataset with 188 leads much more accurately than GPT-4o, which mistakenly identified 848 leads.

This highlights GPT-4.1’s improved accuracy when processing structured data.

Content Creation and Analysis

For content creation tasks, the results are more mixed.

Some users report that GPT-4.1 feels “less human” in its responses compared to GPT-4o, though it does appear to use fewer em dashes – a stylistic quirk that had become a telltale sign of AI-generated content.

In my experience, GPT-4.1 produces more concise and focused content, while GPT-4o tends toward more conversational, sometimes verbose responses.

Whether this is an improvement depends on your specific needs and preferences.

Who Should Use Which Model: Finding Your AI Match

Based on my testing and analysis of the available data, here are my recommendations for different user groups:

For Developers and Power Users (API)

If you require maximum context and control, the API versions offer significant advantages:

- GPT-4.1 API: Best for demanding coding tasks, large codebases, and projects requiring the full 1 million token context. The increased output token limit of 32,768 (up from GPT-4o’s 16,384) is also beneficial for generating longer code segments.

- Gemini 2.5 Pro API: Consider this for tasks requiring superior reasoning capabilities and advanced multimodal features, especially with its competitive 1 million token context.

- Claude 3.7 Sonnet API: Excellent for tasks demanding meticulous reasoning within its 200K context window.

For my personal coding projects, I consistently get better results with Claude 3.7 Sonnet Thinking and Gemini 2.5 Pro than with GPT-4.1, despite what the benchmarks suggest.

Their reasoning capabilities appear to translate into more robust and elegant code solutions, especially for complex problems.

For ChatGPT Plus/Pro/Team Users

For those using the ChatGPT interface with paid subscriptions:

- GPT-4.1: Ideal for coding, precise instruction following, and web development within your tier’s context limits. Its more recent knowledge cutoff (June 2024) is also an advantage.

- GPT-4o: Still the go-to for multimodal tasks, image generation, and more conversational interactions.

For ChatGPT Free Users

Free users now have access to GPT-4.1 mini, which offers enhanced capabilities compared to the previous GPT-4o mini, particularly in coding and instruction following, all within an 8K context window.

If coding is your primary need, consider exploring Claude 3.7 Sonnet Thinking or Gemini 2.5 Pro, which I’ve found superior despite the benchmark numbers.

The Future of Context Windows: What’s Next?

The disparity between API and ChatGPT context windows raises questions about future developments.

Will OpenAI eventually bring the full 1 million token context to ChatGPT users?

There are several factors to consider:

- Computational Costs: Serving large context queries to millions of simultaneous users requires substantial resources.

- Competitive Pressure: With Google’s Gemini 2.5 offering 1 million tokens to all users, OpenAI may feel pressure to upgrade ChatGPT’s context window.

- Performance at Scale: According to DailyBot, reports suggest that accuracy in GPT-4.1 decreases from approximately 84% at 8K tokens to around 50% at the full 1 million token capacity, indicating challenges in effectively utilizing very large contexts.

It’s reasonable to expect that as hardware and architectural improvements emerge, we’ll see ChatGPT’s context limitations expand.

OpenAI may eventually upgrade ChatGPT’s context windows to compete more directly with Gemini 2.5, especially if the gap becomes a competitive disadvantage.

Frequently Asked Questions About GPT-4.1 in ChatGPT

What is GPT-4.1 context limit in ChatGPT?

The GPT-4.1 context limit in ChatGPT depends on your subscription tier: 8K tokens for Free users (using GPT-4.1 mini), 32K tokens for Plus and Team users, and 128K tokens for Pro and Enterprise users.

These are significantly smaller than the 1 million token context available through the API version.

How does GPT-4.1 compare to GPT-4o in ChatGPT?

GPT-4.1 outperforms GPT-4o in coding tasks (with a 21.4% improvement on benchmarks), instruction following, and long-context comprehension.

It generally provides more concise responses with fewer stylistic quirks like em dashes.

However, GPT-4o retains advantages in multimodal capabilities for handling images and audio.

Is GPT-4.1 1 million context available in ChatGPT Plus?

No, ChatGPT Plus users are limited to a 32K token context window with GPT-4.1, not the full 1 million tokens available in the API version.

Only API users can access the complete 1 million token context capability.

What are the context limits for ChatGPT users with GPT-4.1?

ChatGPT Free users get 8K tokens with GPT-4.1 mini, Plus and Team users get 32K tokens with GPT-4.1, and Pro and Enterprise users get 128K tokens with GPT-4.1.

These limits are the same as the previous GPT-4o model.

Which is better GPT-4.1 or Claude 3.7 Sonnet for coding?

According to LiveBench benchmarks, GPT-4.1 and Claude 3.7 Sonnet perform similarly on coding tasks (73.19% score for both).

However, in my personal experience, I consistently get better results with Claude 3.7 Sonnet Thinking for coding tasks, especially complex ones.

Its methodical reasoning approach seems to produce more robust and elegant code solutions despite what the raw benchmark numbers suggest.

Does GPT-4.1 mini have 1 million context in ChatGPT Free?

No, despite the API version supporting 1 million tokens, GPT-4.1 mini in the free tier of ChatGPT is limited to just 8K tokens of context.

This significant restriction limits its ability to process long documents or maintain extensive conversation history.

What is the difference between GPT-4.1 API and ChatGPT version?

The primary difference is context window size: API users get the full 1 million token context, while ChatGPT users receive tier-based limitations (8K/32K/128K).

Additionally, the API version has an increased output token limit of 32,768 tokens, providing more flexibility for developers creating applications that require longer outputs.

How much context does ChatGPT Pro get with GPT-4.1?

ChatGPT Pro users get 128K tokens of context with GPT-4.1, which is the highest available in the ChatGPT interface but still significantly less than the 1 million tokens available through the API.

Is GPT-4.1 better than Gemini 2.5 Pro?

According to LiveBench data, Gemini 2.5 Pro outperforms GPT-4.1 in overall scores (78.99 vs. 62.99), particularly excelling in reasoning (88.25 vs. 44.39) and mathematics (88.63 vs. 62.39).

In my personal experience, Gemini 2.5 Pro delivers superior coding results despite the benchmarks showing GPT-4.1 being competitive in coding metrics.

For ChatGPT users, the limited context window is a significant disadvantage compared to Gemini 2.5 Pro’s full 1 million token context.

Conclusion: Progress with Limitations

The introduction of GPT-4.1 and GPT-4.1 mini to ChatGPT represents a positive step in bringing OpenAI’s latest advancements to a wider audience.

These models offer notable improvements in coding, instruction following, and precision compared to their predecessors.

However, the significant context window limitation compared to the API version creates a substantial gap between what’s theoretically possible with these models and what’s actually available to ChatGPT users.

This disparity is especially notable when compared to competitors like Gemini 2.5, which offers its full 1 million token context to all users.

In my view, understanding these distinctions is crucial for setting realistic expectations and making informed choices about which model and platform best suit your specific needs.

While ChatGPT’s interface offers accessibility and ease of use, those requiring extensive context handling might need to consider API access or alternative platforms.

As the AI landscape continues to evolve at a breathtaking pace, today’s limitations may become tomorrow’s standard features.

It will be interesting to see how OpenAI responds to competitive pressure and whether they’ll eventually bring the full context capabilities to their flagship ChatGPT product.

Until then, I recommend choosing your model thoughtfully based on your specific requirements, considering not just the model’s capabilities but also the platform-specific limitations that might affect your experience.